AI Gateway

Introducing AI Gateway

As AI adoption accelerates, applications are evolving beyond basic LLM calls into complex, multi-actor systems, including user apps, agents, orchestration layers, and context servers that interact with foundation models in real time.

To support this shift, developers are adopting protocols like Model Context Protocol (MCP) and Agent2Agent (A2A) to standardize how components exchange tools, data, and decisions.

But infrastructure often falls behind, with challenges around authentication, rate limiting, data security, observability, and constant provider changes.

AI Gateway addresses these challenges with a high-performance control plane that secures, governs, and observes AI-native systems end to end. Whether serving LLM traffic, exposing structured context via MCP, or coordinating agents through A2A, AI Gateway ensures scalable, secure, and reliable AI infrastructure.

Quickstart

Sign up for Konnect to get started with AI Gateway.

Or, launch a demo instance of AI Gateway running on-prem:

```bash
curl -Ls https://get.konghq.com/ai | bash
```

AI Gateway providers

Kong AI Gateway routes AI requests to various providers through a provider-agnostic API. This normalized API layer provides multiple benefits: client applications stay decoupled from provider-specific APIs, credentials are managed centrally, and requests can be routed dynamically to optimize for cost, latency, or availability.

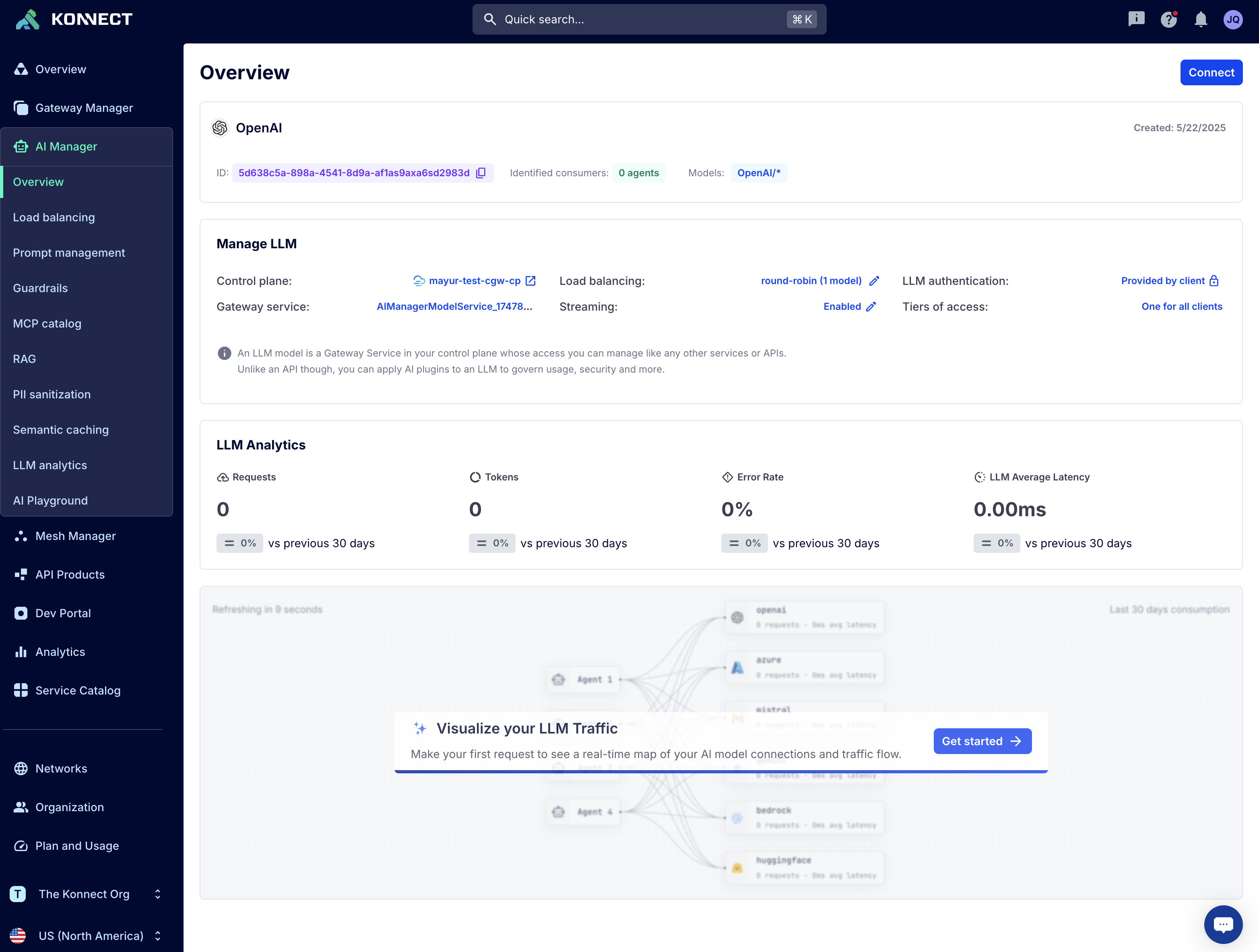
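To make the normalized API layer more concrete, here is a minimal Python sketch, not Kong's implementation, of translating one provider-agnostic chat request into provider-specific request bodies. It assumes clients send an OpenAI-style `messages` list; the helper `to_provider_body` and its default `max_tokens` value are hypothetical, and only the basic shape of each provider's request is modeled.

```python
from typing import Any

Message = dict[str, str]


def to_provider_body(provider: str, model: str, messages: list[Message],
                     max_tokens: int = 1024) -> dict[str, Any]:
    """Translate a provider-agnostic chat request into a provider-specific body.

    Hypothetical helper: only the basic request shape of each provider is modeled,
    and the max_tokens default is an arbitrary example value.
    """
    if provider == "openai":
        # OpenAI Chat Completions accepts system messages inline in `messages`.
        return {"model": model, "messages": list(messages)}
    if provider == "anthropic":
        # Anthropic's Messages API takes the system prompt as a top-level field
        # and requires `max_tokens` on every request.
        system = "\n".join(m["content"] for m in messages if m["role"] == "system")
        body: dict[str, Any] = {
            "model": model,
            "max_tokens": max_tokens,
            "messages": [m for m in messages if m["role"] != "system"],
        }
        if system:
            body["system"] = system
        return body
    raise ValueError(f"unsupported provider: {provider}")


if __name__ == "__main__":
    chat = [{"role": "system", "content": "Be brief."},
            {"role": "user", "content": "What is an API gateway?"}]
    print(to_provider_body("anthropic", "example-model", chat))
```

Because the client only ever builds the `messages` list, switching providers becomes a gateway-side change rather than an application change.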

AI Gateway in Konnect

Konnect provides a unified control plane for creating, managing, and monitoring LLMs.

Key features include:

- Routing and load balancing: Assign Gateway Services and define how traffic is distributed across models.

- Streaming and authentication: Enable streaming responses and manage authentication through the AI Gateway.

- Access control: Create and apply access tiers to control how clients interact with LLMs.

- Usage analytics: Monitor request and token volumes, track error rates, and measure average latency with historical comparisons.

- Visual traffic maps: Explore interactive maps that show how requests flow between clients and models in real time.

Deployment checklist

- AI Gateway resource sizing guidelines: Review recommended resource allocation guidelines for AI Gateway.

- Deployment topologies: Learn about the different ways to deploy Kong Gateway.

- Hosting options: Decide where you want to host your Data Plane nodes, and whether you want Kong to host them or host them yourself.

Tools to manage AI Gateway

- AI Gateway editor: GUI for managing all your AI Gateway resources in one place.

- decK: Manage AI Gateway and Kong Gateway configuration through declarative state files.

- Terraform: Manage infrastructure as code and automated deployments to streamline setup and configuration of Konnect and Kong Gateway.

- KIC (Kong Ingress Controller): Manage ingress traffic and routing rules for your services.

- Kong Gateway Admin API: Manage on-prem Kong Gateway entities via an API.

- Control Plane Config API: Manage Kong Gateway entities within Konnect Control Planes via an API.

AI Gateway capabilities

You can enable AI Gateway features through a set of modern, specialized plugins, using the same model you use for any other Kong Gateway plugin. By combining these plugins with existing Kong Gateway plugins, users can quickly assemble a sophisticated AI management platform without writing custom code or deploying new and unfamiliar tools.

Universal API

Kong’s AI Gateway Universal API, delivered through the AI Proxy and AI Proxy Advanced plugins, simplifies AI model integration by providing a single, standardized interface for interacting with models across multiple providers.

- Easy to use: Configure once and access any AI model with minimal integration effort.

- Load balancing: Automatically distribute AI requests across multiple models or providers for optimal performance and cost efficiency.

- Retry and fallback: Retry failed AI requests and fall back to other models or providers, optimizing for model performance, cost, or other factors.

- Cross-plugin integration: Leverage AI in non-AI API workflows through other Kong Gateway plugins.
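Retry and fallback can be pictured as an ordered list of targets: retry the current target a few times, then move on to the next one. The sketch below is illustrative only; `call_with_fallback`, the target labels, and the `send` callable are hypothetical stand-ins, not AI Proxy Advanced configuration.

```python
from typing import Callable, Sequence


class AllTargetsFailed(Exception):
    """Raised when every target has used up its retries."""


def call_with_fallback(targets: Sequence[str], send: Callable[[str], str],
                       retries: int = 1) -> tuple[str, str]:
    """Try targets in priority order, retrying each before falling back.

    Hypothetical helper: a target is just a label and `send` stands in for the
    real upstream call. Returns (target, response) from the first success.
    """
    errors: list[str] = []
    for target in targets:
        for attempt in range(retries + 1):  # one initial try plus `retries` retries
            try:
                return target, send(target)
            except Exception as exc:  # this sketch treats every error as retryable
                errors.append(f"{target} attempt {attempt + 1}: {exc}")
    raise AllTargetsFailed("; ".join(errors))


if __name__ == "__main__":
    def flaky_send(target: str) -> str:
        if target == "primary-model":
            raise TimeoutError("upstream timeout")
        return f"response from {target}"

    # primary-model fails twice (initial try + 1 retry), then the fallback succeeds.
    print(call_with_fallback(["primary-model", "fallback-model"], flaky_send))
```

A production balancer would also distinguish retryable errors (timeouts, 5xx) from ones that should fail fast; the sketch skips that distinction for brevity.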

AI Usage Governance

As AI technologies see broader adoption, developers and organizations face new risks, most notably sensitive data leaking to AI providers, which exposes businesses and their customers to potential breaches and security threats.

Managing how data flows to and from AI models has become critical not just for security, but also for compliance and reliability. Without the right controls in place, organizations risk losing visibility into how AI is used across their systems.

- Data governance: Control how sensitive information is handled and shared with AI models.

- Prompt engineering: Customize and optimize prompts to deliver consistent, high-quality AI outputs.

- Guardrails and content safety: Enforce policies to prevent inappropriate, unsafe, or non-compliant responses.

- Automated RAG injection: Seamlessly inject relevant, vetted data into AI prompts without manual RAG implementations.

- Load balancing: Distribute AI traffic efficiently across multiple model endpoints to ensure performance and reliability.

- LLM cost control: Use the AI Compressor, RAG Injector, and AI Prompt Decorator plugins to compress and structure prompts efficiently. Combine them with AI Proxy Advanced to route requests across OpenAI models by semantic similarity, optimizing for cost and performance.

Data governance

AI Gateway enforces governance on outgoing AI prompts through allow/deny lists, blocking unauthorized requests with 4xx responses. It also provides built-in PII sanitization, automatically detecting and redacting sensitive data across 20 categories and 9 languages. Because it runs privately and self-hosted for full control and compliance, AI Gateway applies protection consistently without burdening developers, which helps simplify AI adoption at scale.
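The allow/deny behavior can be sketched as a small check that returns an HTTP status before a prompt leaves the gateway. This is a hypothetical illustration, assuming regular-expression patterns and `400` as the example 4xx response; the email redactor covers just one PII category, whereas the built-in sanitizer described above covers many.

```python
import re

# Illustrative pattern for one PII category (email addresses) only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def check_prompt(prompt: str, allow: list[str], deny: list[str]) -> int:
    """Return 200 if the prompt may pass, or 400 if it is blocked.

    Hypothetical rule order: any deny-pattern match blocks the prompt; if an
    allow list is configured, at least one allow pattern must also match.
    """
    if any(re.search(pattern, prompt) for pattern in deny):
        return 400
    if allow and not any(re.search(pattern, prompt) for pattern in allow):
        return 400
    return 200


def redact_emails(text: str) -> str:
    """Replace every email address in `text` with a placeholder token."""
    return EMAIL.sub("<EMAIL>", text)


if __name__ == "__main__":
    print(check_prompt("Share the admin password", allow=[], deny=[r"(?i)password"]))
    print(redact_emails("Contact jane.doe@example.com for access."))
```

Checking deny patterns first means a prompt that matches both lists is still blocked, which is the safer default for a sketch like this.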

Prompt engineering

AI systems are built around prompts, and shaping those prompts well is key to adopting these technologies successfully. Prompt engineering is the practice of crafting and adjusting the linguistic inputs that guide an AI system. AI Gateway supports a set of plugins that simplify and enhance this experience by setting default prompts or modifying client prompts as they pass through the gateway.
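As a rough illustration of both techniques, the sketch below adds a default system prompt when the client omits one, and wraps client messages with gateway-managed messages. The function names and the OpenAI-style message shape are assumptions made for the example, not a plugin's actual configuration.

```python
Message = dict[str, str]


def with_default_system(messages: list[Message], default: str) -> list[Message]:
    """Insert a default system prompt unless the client already sent one."""
    if any(m["role"] == "system" for m in messages):
        return list(messages)
    return [{"role": "system", "content": default}, *messages]


def decorate(messages: list[Message], prepend: list[Message],
             append: list[Message]) -> list[Message]:
    """Surround client messages with gateway-managed messages.

    Returns a new list; the client's list is left unchanged.
    """
    return [*prepend, *messages, *append]


if __name__ == "__main__":
    client = [{"role": "user", "content": "Classify: 'Checkout was slow.'"}]
    print(with_default_system(client, "You classify survey answers by topic."))
```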

Guardrails and content safety

As a platform owner proxying Large Language Model (LLM) traffic, you may need to moderate all user request content against reputable moderation services to comply with policies on specific sensitive categories. AI Gateway provides built-in content moderation and content safety capabilities that help you enforce compliance and protect your users across AI-powered applications.
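One way to picture moderation against an external service is a per-category threshold check on the scores that service returns. In this hypothetical sketch, `classify` stands in for the moderation service, and the category names and thresholds are made up; a non-empty result would mean the request is rejected.

```python
from typing import Callable, Mapping


def moderate(text: str, classify: Callable[[str], Mapping[str, float]],
             thresholds: Mapping[str, float]) -> list[str]:
    """Return the categories whose score meets or exceeds its threshold.

    `classify` is a hypothetical stand-in for an external moderation service
    that returns a 0-1 score per category. An empty list means "allow".
    Categories missing from the service's response are scored as 0.0.
    """
    scores = classify(text)
    return sorted(category for category, limit in thresholds.items()
                  if scores.get(category, 0.0) >= limit)


if __name__ == "__main__":
    # Toy keyword-based classifier, for demonstration only.
    def toy_classify(text: str) -> dict[str, float]:
        return {"violence": 0.9 if "attack" in text else 0.1}

    print(moderate("plan an attack", toy_classify, {"violence": 0.5, "hate": 0.5}))
```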

Request transformations

AI Gateway lets you use AI to augment other API traffic. For example, you can route API responses through an AI language-translation prompt before returning them to the client. AI Gateway provides two plugins, AI Request Transformer and AI Response Transformer, that work alongside other upstream API services to weave AI capabilities into API request processing. These plugins can be configured independently of the AI Proxy plugin.
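The translation example can be sketched as wrapping an upstream response body in a transformation prompt and returning the model's reply in its place. `transform_response` and the `llm` callable are hypothetical; the LLM call is injected here so the sketch runs without any provider.

```python
from typing import Callable

Message = dict[str, str]


def transform_response(upstream_body: str, instruction: str,
                       llm: Callable[[list[Message]], str]) -> str:
    """Rewrite an upstream API response body with an LLM before it reaches the client.

    `instruction` is the transformation prompt (for example, a translation
    request); `llm` is a hypothetical callable that takes chat messages and
    returns the model's reply text, which becomes the new response body.
    """
    messages = [
        {"role": "system", "content": instruction},
        {"role": "user", "content": upstream_body},
    ]
    return llm(messages)


if __name__ == "__main__":
    # Stand-in "model" that just echoes the body in upper case.
    echo_llm = lambda msgs: msgs[-1]["content"].upper()
    print(transform_response('{"greeting": "hello"}', "Translate values to French.", echo_llm))
```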

Automated RAG

LLMs are only as reliable as the data they can access. When faced with incomplete information, they often produce confident yet incorrect responses known as “hallucinations.” These hallucinations occur when LLMs lack the necessary domain knowledge. To address this, developers use the Retrieval-Augmented Generation (RAG) approach, which enriches models with relevant data pulled from vector databases.

Standard RAG workflows are resource-heavy because teams must generate embeddings and manually curate them in vector databases. Kong’s AI RAG Injector plugin automates this entire process. Instead of embedding RAG logic into every application individually, platform teams can inject vetted data into prompts directly at the gateway layer, without manual intervention.
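The retrieval step at the heart of RAG can be sketched in a few lines: rank stored chunks by cosine similarity to the prompt's embedding and prepend the best matches. This is a simplified illustration, not the AI RAG Injector's implementation; an in-memory list with precomputed toy vectors stands in for both the vector database and the embedding model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def inject_context(prompt: str, prompt_vec: list[float],
                   chunks: list[tuple[str, list[float]]], k: int = 2) -> str:
    """Build a prompt that starts with the k chunks most similar to the question.

    `chunks` pairs each vetted text chunk with its precomputed embedding
    (a hypothetical in-memory stand-in for a vector database).
    """
    ranked = sorted(chunks, key=lambda c: cosine(prompt_vec, c[1]), reverse=True)
    context = "\n".join(text for text, _ in ranked[:k])
    return f"Use the following context to answer.\n{context}\n\nQuestion: {prompt}"


if __name__ == "__main__":
    store = [("Refunds take 5 days.", [1.0, 0.0]),
             ("The office opens at 9.", [0.0, 1.0]),
             ("Refunds need a receipt.", [0.9, 0.1])]
    print(inject_context("How do refunds work?", [1.0, 0.0], store))
```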

Load balancing

AI Gateway’s load balancer routes requests across AI models to optimize for speed, cost, and reliability. It supports algorithms such as consistent hashing, lowest-latency, usage-based, round-robin, and semantic matching, with built-in retries and fallback for resilience (v3.10+). The balancer dynamically selects models based on real-time performance and prompt relevance, and works across mixed environments that include OpenAI, Mistral, and Llama models.
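Two of the listed algorithms are easy to sketch: round-robin cycles through targets in order, and lowest-latency prefers the target with the best recent response times. This is a hypothetical illustration; the exponentially weighted moving average (EWMA) and its `alpha` weight are assumptions made for the example about how "recent" latency might be tracked, not the balancer's documented behavior.

```python
import itertools
from typing import Iterator


def round_robin(targets: list[str]) -> Iterator[str]:
    """Yield targets in a fixed repeating order."""
    return itertools.cycle(targets)


class LowestLatency:
    """Pick the target with the lowest EWMA latency (hypothetical sketch)."""

    def __init__(self, targets: list[str], alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest latency sample
        self.ewma: dict[str, float] = {t: 0.0 for t in targets}
        self.seen: dict[str, bool] = {t: False for t in targets}

    def record(self, target: str, latency_ms: float) -> None:
        """Fold a new latency sample into the target's moving average."""
        if not self.seen[target]:
            self.ewma[target] = latency_ms  # the first sample seeds the average
            self.seen[target] = True
        else:
            self.ewma[target] = (self.alpha * latency_ms
                                 + (1 - self.alpha) * self.ewma[target])

    def pick(self) -> str:
        # Untried targets keep an average of 0.0, so they are preferred until measured.
        return min(self.ewma, key=self.ewma.__getitem__)


if __name__ == "__main__":
    rr = round_robin(["model-a", "model-b"])
    print([next(rr) for _ in range(3)])
    balancer = LowestLatency(["model-a", "model-b"])
    balancer.record("model-a", 300.0)
    balancer.record("model-b", 120.0)
    print(balancer.pick())
```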

LLM cost control

The AI Gateway helps reduce LLM usage costs by giving you control over how prompts are built and routed. You can compress and structure prompts efficiently using the AI Compressor, RAG Injector, and AI Prompt Decorator plugins. For further savings, you can use AI Proxy Advanced to route requests across OpenAI models based on semantic similarity.
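Semantic routing can be pictured as choosing the model whose description embedding is most similar to the prompt's embedding, so that simple prompts go to a cheaper model. In this hypothetical sketch the model names and two-dimensional vectors are placeholders; real embeddings would come from an embedding model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def route_by_similarity(prompt_vec: list[float], targets: dict[str, list[float]]) -> str:
    """Return the target whose description embedding is closest to the prompt.

    `targets` maps hypothetical model names to embeddings of a short
    description of what each model should handle.
    """
    return max(targets, key=lambda name: cosine(prompt_vec, targets[name]))


if __name__ == "__main__":
    models = {"small-cheap-model": [1.0, 0.0],      # e.g. short factual questions
              "large-reasoning-model": [0.0, 1.0]}  # e.g. multi-step analysis
    print(route_by_similarity([0.9, 0.2], models))
```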

Observability and metrics

AI Gateway provides multiple approaches to monitor LLM traffic and operations. Track token usage, latency, and costs through audit logs and metrics exporters. Instrument request flows with OpenTelemetry to trace prompts and responses across your infrastructure. Use Konnect Advanced Analytics for pre-built dashboards, or integrate with your existing observability stack.
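Once per-request usage is captured, cost tracking is simple arithmetic. The sketch below aggregates hypothetical usage records per model; the `UsageRecord` fields and the prices are illustrative assumptions, not Kong's log schema or any provider's pricing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageRecord:
    """One proxied LLM request (hypothetical fields, not Kong's log schema)."""
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


def summarize(records: list[UsageRecord],
              price_per_1k: dict[str, tuple[float, float]]) -> dict[str, dict[str, float]]:
    """Aggregate requests, tokens, cost, and average latency per model.

    `price_per_1k` maps each model to illustrative (prompt, completion)
    prices per 1,000 tokens.
    """
    totals: dict[str, dict[str, float]] = defaultdict(
        lambda: {"requests": 0, "tokens": 0, "cost": 0.0, "latency_sum": 0.0})
    for r in records:
        t = totals[r.model]
        prompt_price, completion_price = price_per_1k[r.model]
        t["requests"] += 1
        t["tokens"] += r.prompt_tokens + r.completion_tokens
        t["cost"] += (r.prompt_tokens * prompt_price
                      + r.completion_tokens * completion_price) / 1000
        t["latency_sum"] += r.latency_ms
    return {model: {"requests": t["requests"], "tokens": t["tokens"], "cost": t["cost"],
                    "avg_latency_ms": t["latency_sum"] / t["requests"]}
            for model, t in totals.items()}


if __name__ == "__main__":
    logs = [UsageRecord("model-a", 1000, 500, 200.0),
            UsageRecord("model-a", 2000, 0, 400.0)]
    print(summarize(logs, {"model-a": (0.5, 1.5)}))
```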

How-to Guides

- Authenticate OpenAI SDK clients with Key Authentication in Kong AI Gateway

- Send batch requests to Azure OpenAI LLMs

- Control accuracy of LLM models using the AI LLM as judge plugin

- Control prompt size with the AI Compressor plugin

- Guide survey classification behavior using the AI Prompt Decorator plugin

Frequently Asked Questions

Is AI Gateway available for all deployment modes?

Yes, AI plugins are supported in all deployment modes, including Konnect, self-hosted traditional, hybrid, and DB-less, and on Kubernetes via the Kong Ingress Controller.

Why should I use AI Gateway instead of adding the LLM’s API behind Kong Gateway?

If you simply place an LLM’s API behind Kong Gateway, the gateway treats that traffic like any other API traffic and can act on it only at the API level. With AI plugins, Kong Gateway understands the prompts being sent through it: the plugins inspect the request body and apply AI-specific capabilities to your traffic.
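To illustrate the difference, the sketch below parses an OpenAI-style chat request body to recover the user's prompt, something a plain proxy that forwards the body as opaque bytes never does. The function name and body shape are assumptions for the example, not the plugins' internals.

```python
import json


def extract_prompt(raw_body: bytes) -> str:
    """Return the text of the last user message in an OpenAI-style chat body.

    Hypothetical helper: a plain proxy forwards `raw_body` untouched, while an
    AI-aware layer parses it along these lines so it can act on the prompt.
    """
    body = json.loads(raw_body)
    user_msgs = [m["content"] for m in body.get("messages", [])
                 if m.get("role") == "user"]
    if not user_msgs:
        raise ValueError("no user message in request body")
    return user_msgs[-1]


if __name__ == "__main__":
    raw = (b'{"model": "example-model", "messages": ['
           b'{"role": "system", "content": "Be brief."}, '
           b'{"role": "user", "content": "Summarize this ticket."}]}')
    print(extract_prompt(raw))
```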